Welcome to my homepage! I am a Ph.D. student at HKU 🇭🇰, advised by Prof. C.M. Kao. I also work with Prof. Lingpeng Kong at HKUNLP and Dr. Zhiyong Wu. My research interests include (1) Natural Language Processing and (2) Computational Linguistics. Previously, I was a master’s student at NUS 🇸🇬, advised by Dr. Xiaoli Li at I2R, A*STAR. Before that, I completed my B.Eng with distinction at East China Normal University, where I was privileged to be instructed by Prof. Weining Qian, Prof. Xuesong Lu, and Prof. Xiang Li.

🍀 Office hours: I hold office hours (1~2 hours per week) to offer consultation for COMP7607 / COMP3270 students and mentorship programs. If you would like to chat (whether or not it’s about research), please book a slot through this link and send me an e-mail.

🐈 Collaboration: I am looking for motivated collaborators interested in the above topics. If you would like to explore these directions together, feel free to contact me. UG/MSc students are also welcome! 🌱

📝 Selected Publications

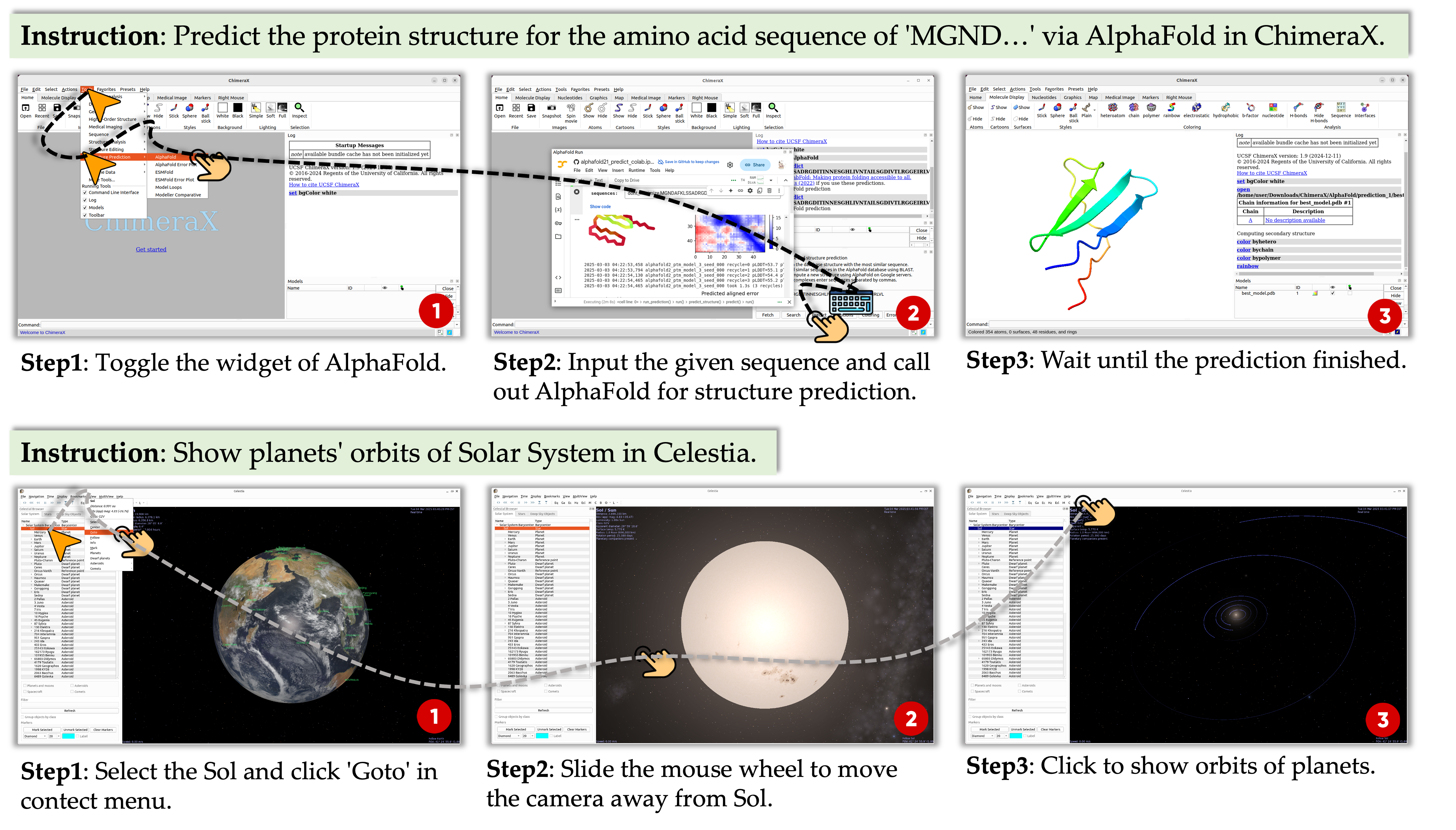

ScienceBoard: Evaluating Multimodal Autonomous Agents in Realistic Scientific Workflows 🤖🔬

Qiushi Sun, Zhoumianze Liu, Chang Ma, Zichen Ding, Fangzhi Xu, Zhangyue Yin, Haiteng Zhao, Zhenyu Wu, Kanzhi Cheng, Zhaoyang Liu, Jianing Wang, Qintong Li, Xiangru Tang, Tianbao Xie, Xiachong Feng, Xiang Li, Ben Kao, Wenhai Wang, Biqing Qi, Lingpeng Kong, Zhiyong Wu

[Paper] | [Slides] | [Project] |

- First to apply computer-using agents to assist scientific exploration 🌌

- Dynamic environment & benchmark for realistic scientific workflows 🌍

- Comprehensive evaluation of SOTA LLM/VLM agents 🧭

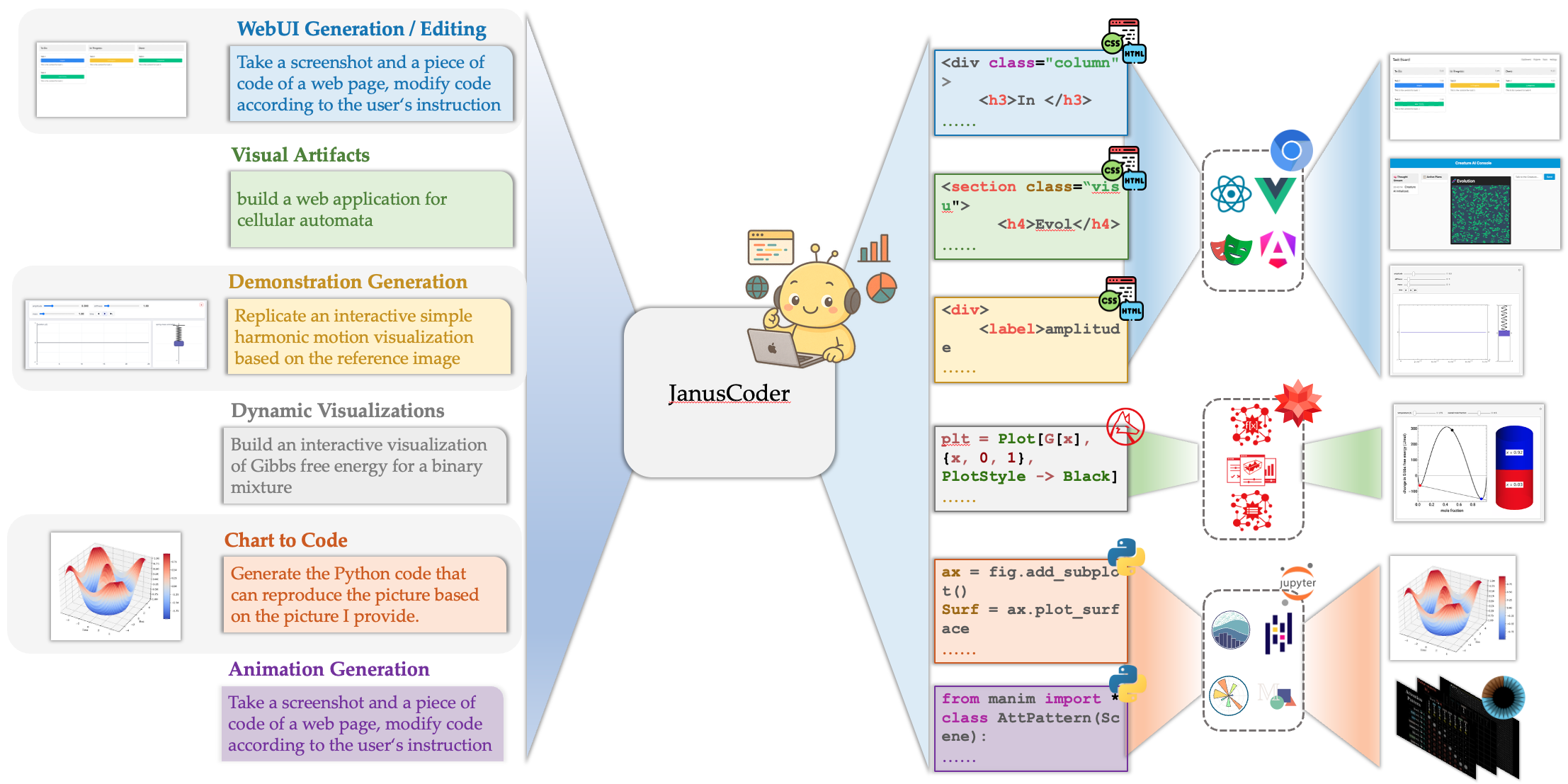

JanusCoder: Towards a Foundational Visual-Programmatic Interface for Code Intelligence

Qiushi Sun, Jingyang Gong, Yang Liu, Qiaosheng Chen, Lei Li, Kai Chen, Qipeng Guo, Ben Kao, Fei Yuan

[Paper] | [Slides] | [Project] |

- JanusCoder series: foundational models establishing a unified visual-programmatic interface. ⚙️

- A versatile data synthesis toolkit for multimodal code intelligence. 🛠️

- Superior performance on diverse text- and vision-centric tasks. 🧭

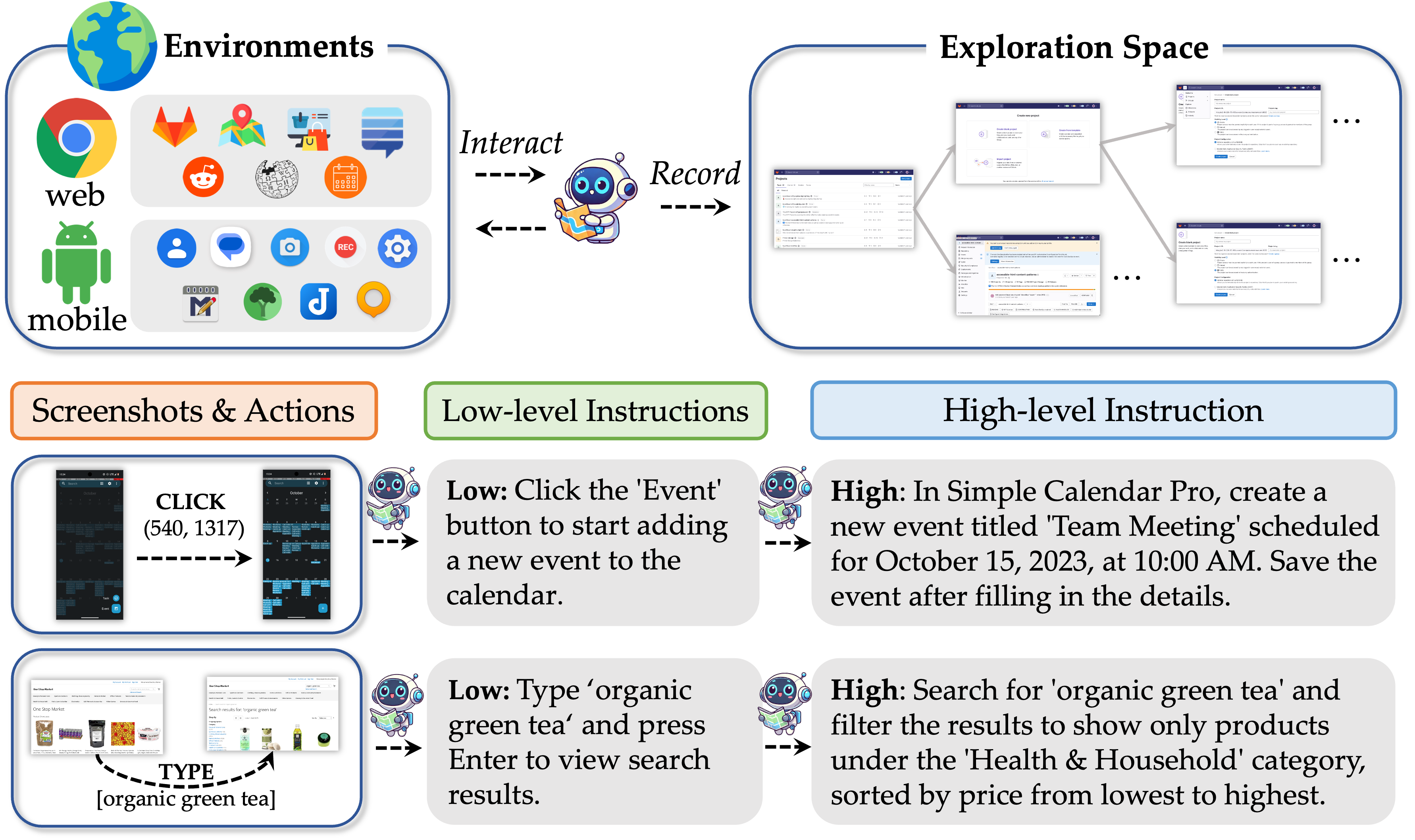

OS-Genesis: Automating GUI Agent Trajectory Construction via Reverse Task Synthesis 🔥🔥

Qiushi Sun*, Kanzhi Cheng*, Zichen Ding*, Chuanyang Jin*, Yian Wang, Fangzhi Xu, Zhenyu Wu, Chengyou Jia, Liheng Chen, Zhoumianze Liu, Ben Kao, Guohao Li, Junxian He, Yu Qiao, Zhiyong Wu

[Paper] | [Slides] | [Project] |

- Shift from task-driven to interaction-driven GUI data synthesis 🤖

- A manual-free pipeline for constructing diverse GUI agent trajectories 🧬

- Great performance on online mobile/web benchmarks 🌟

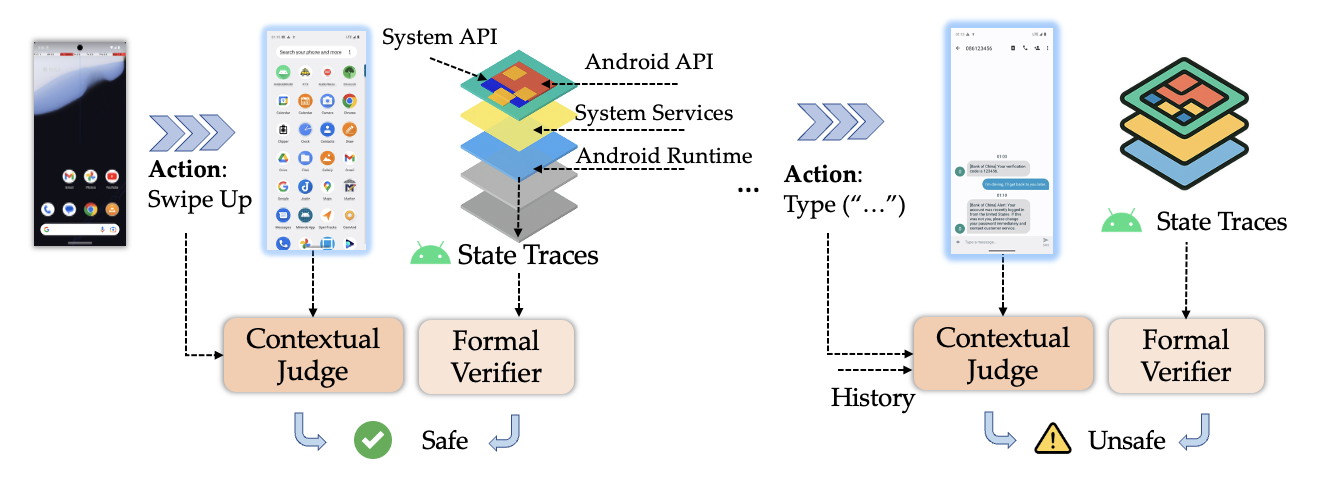

OS-Sentinel: Towards Safety-Enhanced Mobile GUI Agents via Hybrid Validation in Realistic Workflows 🛡️🧐

Qiushi Sun*, Mukai Li*, Zhoumianze Liu*, Zhihui Xie*, Fangzhi Xu, Zhangyue Yin, Kanzhi Cheng, Zehao Li, Zichen Ding, Qi Liu, Zhiyong Wu, Zhuosheng Zhang, Ben Kao, Lingpeng Kong

[Paper] | [Slides] | [Project] |

- MobileRisk-Live & MobileRisk, a dynamic environment and benchmark for realistic mobile agent safety 📱

- OS-Sentinel, a hybrid detection framework combining formal verification with contextual judgment 🛡️

- Advanced mobile agent safety at both the step-level and trajectory-level 🧭

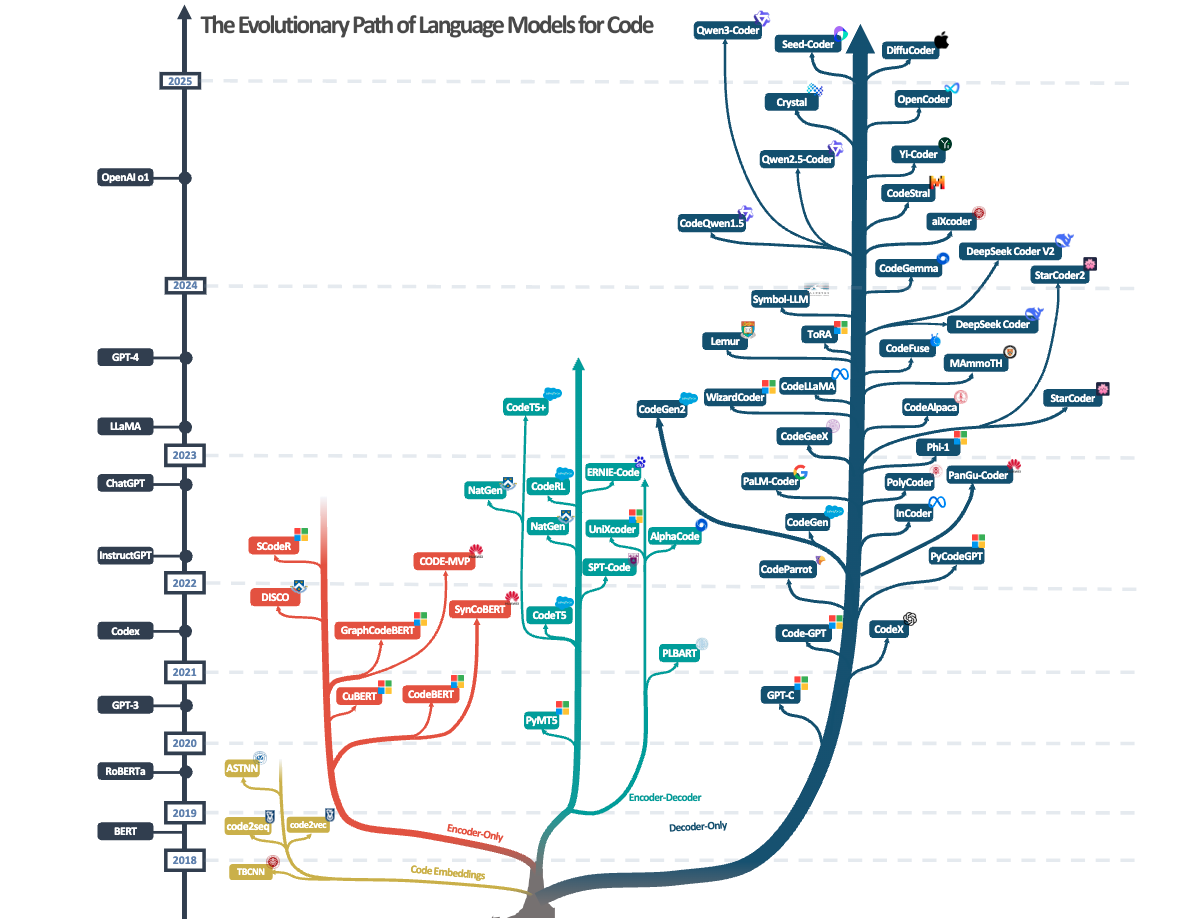

A Survey of Neural Code Intelligence: Paradigms, Advances and Beyond 🔥🔥

Qiushi Sun, Zhirui Chen, Fangzhi Xu, Chang Ma, Kanzhi Cheng, Zhangyue Yin, Jianing Wang, Chengcheng Han, Renyu Zhu, Shuai Yuan, Pengcheng Yin, Qipeng Guo, Xipeng Qiu, Xiaoli Li, Fei Yuan, Lingpeng Kong, Xiang Li, Zhiyong Wu

[Paper] | [Slides] | [Project] | [Video] |

Let me walk you through the development of neural code intelligence:

- Follow LMs for code as a thread to trace the field’s development 🚀

- Explore cross-domain synergies and opportunities 🌱

- Present a broad array of promising research avenues 💡

- Preprint | CODA: Coordinating the Cerebrum and Cerebellum for a Dual-Brain Computer Use Agent with Decoupled Reinforcement Learning, Zeyi Sun*, Yuhang Cao*, Jianze Liang*, Qiushi Sun*, Ziyu Liu*, Zhixiong Zhang, Yuhang Zang, Xiaoyi Dong, Kai Chen, Dahua Lin, Jiaqi Wang.

- Preprint | OS-MAP: How Far Can Computer Use Agents Go in Breadth and Depth?, Xuetian Chen, Yinghao Chen, Xinfeng Yuan, Lu Chen, Yuekeng Li, Zhoujia Zhang, Yingqian Huang, Leyan Huang, Jiaqing Liang, Tianbao Xie, Zhiyong Wu, Qiushi Sun✉, Biqing Qi✉ and Bowen Zhou.

- DL4C @ NeurIPS'25 | CodeEvo: Interaction-Driven Synthesis of Code-centric Data through Hybrid and Iterative Feedback, Qiushi Sun*, Jingyang Gong*, Lei Li, Qipeng Guo and Fei Yuan.

- ACL 2025 | Dynamic and Generalizable Process Reward Modeling, Zhangyue Yin, Qiushi Sun, Zhiyuan Zeng, Qinyuan Cheng, Xipeng Qiu and Xuanjing Huang.

- ICLR 2025 (Spotlight) | OS-ATLAS: A Foundation Action Model For Generalist GUI Agents, Zhiyong Wu, Zhenyu Wu, Fangzhi Xu, Yian Wang, Qiushi Sun, Chengyou Jia, Kanzhi Cheng, Zichen Ding, Liheng Chen, Paul Pu Liang and Yu Qiao.

- COLM 2024 | Corex: Pushing the Boundaries of Complex Reasoning through Multi-Model Collaboration, Qiushi Sun, Zhangyue Yin, Xiang Li, Zhiyong Wu, Xipeng Qiu and Lingpeng Kong. [LLMAgents @ ICLR 2024] | Slides

- ACL 2024 | SeeClick: Harnessing GUI Grounding for Advanced Visual GUI Agents, Kanzhi Cheng, Qiushi Sun, Yougang Chu, Fangzhi Xu, Yantao Li, Jianbing Zhang and Zhiyong Wu. [LLMAgents @ ICLR 2024]

- COLING 2024 | TransCoder: Towards Unified Transferable Code Representation Learning Inspired by Human Skills, Qiushi Sun, Nuo Chen, Jianing Wang, Xiang Li and Ming Gao.

- COLING 2024 | Make Prompt-based Black-Box Tuning Colorful: Boosting Model Generalization from Three Orthogonal Perspectives, Qiushi Sun, Chengcheng Han, Nuo Chen, Renyu Zhu, Jingyang Gong, Xiang Li and Ming Gao. | 🥈 100K RMB Award-winning Solution

- CIKM 2023 (Demo) | HugNLP: A Unified and Comprehensive Library for Natural Language Processing, Jianing Wang, Nuo Chen, Qiushi Sun, Wenkang Huang, Chengyu Wang and Ming Gao. | 🏆 Best Paper Award

- EMNLP 2022 | CAT-probing: A Metric-based Approach to Interpret How Pre-trained Models for Programming Language Attend Code Structure, Nuo Chen*, Qiushi Sun*, Renyu Zhu*, Xiang Li, Xuesong Lu and Ming Gao. | Slides | Video

* denotes equal contribution; ✉ denotes corresponding author. More working drafts and preprints under review will be released later ⌛️

🎖 Honors and Awards

- 2023.10 Best Paper Award (Demo Track), CIKM 2023, ACM & SIGIR

- 2022.12 International Algorithm Case Competition: PLM Tuning, Second Prize, Guangdong-Hong Kong-Macao Greater Bay Area

- 2022.07 Shanghai Outstanding Graduates, Shanghai Municipal Education Commission

- 2022.05 Excellent Bachelor Thesis Award, East China Normal University

- 2021.11 Outstanding Student, East China Normal University

- 2021.10 First Class Scholarship, East China Normal University

- 2021.05 Finalist (Special Class Prize), Mathematical and Interdisciplinary Contest in Modeling

🧑🏫 Teaching

I serve(d) as a teaching assistant for:

- COMP3270: Introduction to Artificial Intelligence (for UG), HKU, 2025 Fall. Instructor: Lingpeng Kong

- COMP7607: Natural Language Processing (for masters), HKU, 2024 Fall. Instructor: Lingpeng Kong

- Deep Learning for Computer Vision (for UG), ECNU, 2021 Fall. Instructor: Xuesong Lu

📖 Education

- 2024.08 - Present, Ph.D., The University of Hong Kong, Hong Kong SAR.

- 2022.08 - 2024.01, Master, National University of Singapore, Singapore.

- 2018.09 - 2022.07, Undergraduate, School of Data Science and Engineering, East China Normal University, Shanghai, China.

- 2011.09 - 2018.07, Middle School, Pudong Foreign Languages School, SISU, Shanghai, China.

🔍 Services

- I serve(d) as an area chair for the following conferences:

  - ACL Rolling Review (ARR), AACL-IJCNLP

- I serve(d) as a reviewer / program committee member for the following conferences, journals, and workshops:

  - Conferences: EMNLP’22, ACL’23, CIKM’23, EMNLP’23 (Best Reviewer Award), ICLR’24, NeurIPS’24, NLPCC’24, ICLR’25, COLING’25, NAACL’25, ICML’25, COLM’25, NeurIPS’25, ICLR’26, CVPR’26, CPAL’26

  - Journals: Frontiers of Computer Science

  - Workshops: WiNLP @ EMNLP’24, DL4C @ ICLR’25, WCUA @ ICML’25, DL4C @ NeurIPS’25, ACL Student Research Workshop

📚 Recent readings

- Sternstunden der Menschheit. Vierzehn historische Miniaturen by Stefan Zweig, 1927

- Science, the Endless Frontier by Vannevar Bush, 1945

- Pearl Harbor: From Infamy to Greatness by Craig Nelson, 2016

“What’s past is prologue.” – William Shakespeare (The Tempest)